Parallel file systems

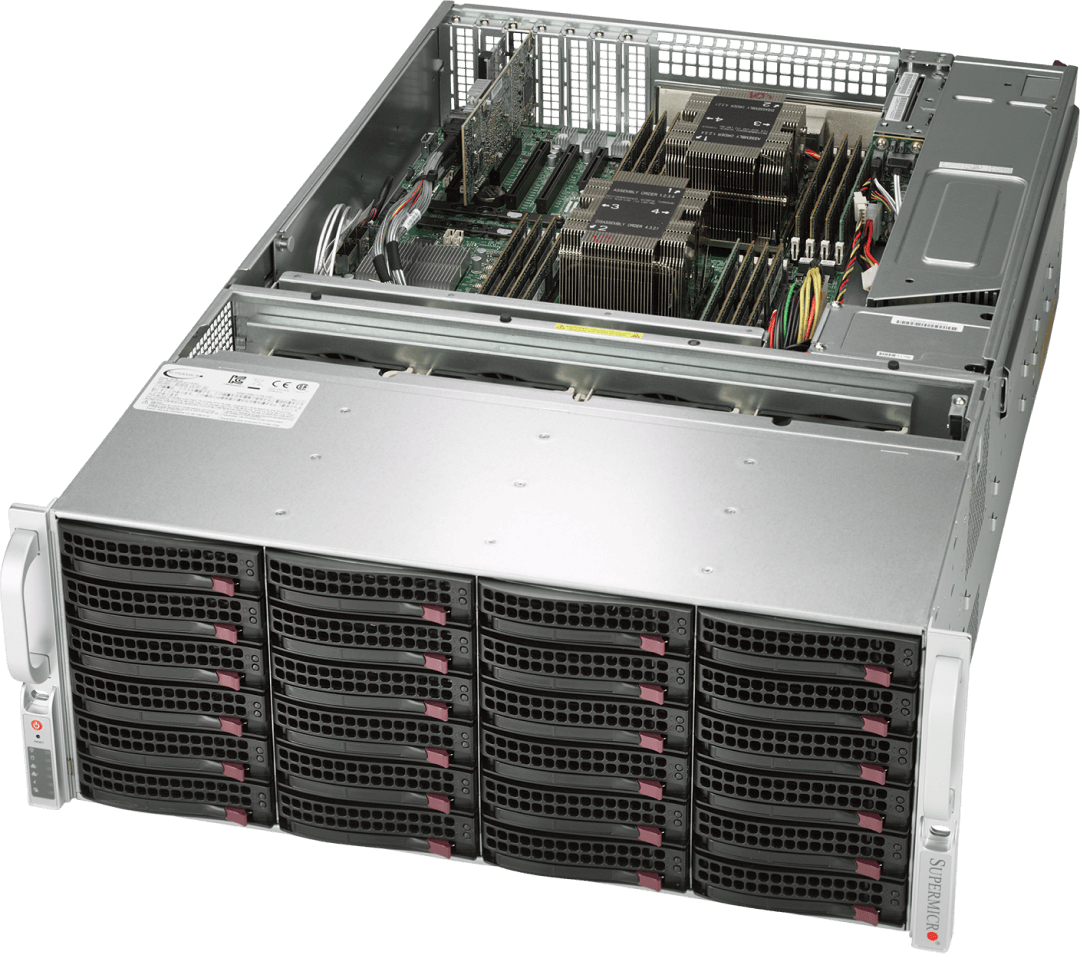

When your computational needs exceed your storage performance, it’s time to consider deploying a parallel file system.

With features like discrete metadata storage, multiple parallel read and write servers, native file system clients, and accelerated MPI-IO, a parallel file system provides the performance you need for HPC, big data, and AI/ML workloads.

An Aspen Systems BeeGFS cluster is a great fit for many HPC workloads. BeeGFS supports RDMA, MPI-IO, parallel access to multiple storage devices via multiple storage servers, and discrete NVMe-accelerated metadata storage.

If a different parallel file system is a better fit for your needs, Aspen Systems can connect you with the right people at one of our many storage partners, so you can be confident you’re getting the best solution. Whether you need Lustre, Spectrum Scale / GPFS, Panasas, or Weka, we can coordinate with the right provider on the best configuration for your complete HPC environment. All the while, we’ll be there to deploy and support your entire integrated HPC compute and storage environment with Aspen’s world-class customer service.

Cooling
