Intel® Gaudi® 3 Accelerator

A huge leap forward for generative AI, and even bigger for return on investment.


Now with twenty-four 200 Gigabit Ethernet ports for full-throttle training.

Intel’s Gaudi 3 accelerators offer enhanced performance, scalability, and efficiency, giving customers a wider range of options. These accelerators are designed to cut training time substantially at a lower cost per watt, helping you reach valuable insights faster, foster innovation, and drive revenue growth.

As the demand for generative AI computing grows, so does the need for diverse solution options to meet customer preferences. The Intel Gaudi 3 AI accelerator is engineered for fast, efficient deep learning computation and scale. Key features include:

Engineered specifically for high-performance, high-efficiency generative AI (GenAI) computing, each Intel Gaudi 3 accelerator houses a dedicated heterogeneous compute engine made up of 64 AI-custom, programmable Tensor Processor Cores (TPCs) and eight Matrix Multiplication Engines (MMEs). Each Intel Gaudi 3 MME can execute an impressive 64,000 parallel operations. This design excels at the intricate matrix operations fundamental to deep learning algorithms, increasing the speed and efficiency of parallel AI workloads. The accelerator also supports a variety of data types, including FP8 and BF16.

To address the growing memory requirements of large GenAI datasets, each Intel Gaudi 3 accelerator is equipped with 128 gigabytes (GB) of HBM2e memory, delivering 3.7 terabytes per second (TB/s) of memory bandwidth. It also incorporates 96 megabytes (MB) of on-board static random access memory (SRAM). These memory capabilities allow sizable GenAI datasets to be processed on fewer Intel Gaudi 3 accelerators, which is particularly beneficial for large language and multimodal models, improving workload performance and cost efficiency in the data center.
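As a rough illustration of why 128 GB per accelerator matters, the sketch below estimates how many accelerators are needed just to hold a model's weights. It assumes decimal gigabytes, 2 bytes per parameter for BF16/FP16, 4 for FP32, and 1 for FP8, and it deliberately ignores activations, KV cache, optimizer state, and framework overhead, so real deployments need more headroom. The helper name `accelerators_for_weights` is hypothetical.

```python
import math

HBM_CAPACITY_GB = 128  # HBM2e capacity per Intel Gaudi 3 accelerator (from the text)

# Assumed storage size per parameter, in bytes, for common data types.
BYTES_PER_PARAM = {"fp32": 4, "bf16": 2, "fp16": 2, "fp8": 1}


def accelerators_for_weights(num_params: float, dtype: str,
                             capacity_gb: float = HBM_CAPACITY_GB) -> int:
    """Minimum accelerators needed to store the weights alone (hypothetical helper).

    Ignores activations, KV cache, optimizer state, and runtime overhead.
    """
    weight_gb = num_params * BYTES_PER_PARAM[dtype] / 1e9  # decimal GB
    return math.ceil(weight_gb / capacity_gb)


if __name__ == "__main__":
    for dtype in ("bf16", "fp8"):
        n = accelerators_for_weights(70e9, dtype)
        print(f"70B parameters in {dtype}: at least {n} accelerator(s)")
```

Under these assumptions, a 70-billion-parameter model needs two accelerators for its BF16 weights (140 GB) but fits on one in FP8 (70 GB).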

Intel Gaudi 3 offers flexible, open-standard networking by integrating twenty-four 200-gigabit (Gb) Ethernet ports into each accelerator. This setup scales to support expansive compute clusters while avoiding the vendor lock-in associated with proprietary networking fabrics. Designed for efficient scaling, the Intel Gaudi 3 accelerator can scale up and out from a single node to thousands, meeting the diverse requirements of GenAI models.
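The per-port figure translates into aggregate network bandwidth with simple arithmetic. The sketch below assumes each 200 Gb Ethernet port runs at its full nominal line rate in each direction and ignores protocol overhead, so the results are upper bounds rather than measured throughput. The function name `aggregate_bandwidth` is illustrative only.

```python
PORTS = 24            # integrated Ethernet ports per Intel Gaudi 3 accelerator
GBIT_PER_PORT = 200   # nominal line rate per port, gigabits per second


def aggregate_bandwidth(ports: int = PORTS, gbit_per_port: int = GBIT_PER_PORT):
    """Return (Gb/s per direction, GB/s per direction, GB/s bidirectional).

    Nominal figures only: assumes full line rate and ignores protocol overhead.
    """
    gbit_per_direction = ports * gbit_per_port
    gbyte_per_direction = gbit_per_direction / 8  # 8 bits per byte
    return gbit_per_direction, gbyte_per_direction, 2 * gbyte_per_direction


if __name__ == "__main__":
    gbit, gbyte, bidir = aggregate_bandwidth()
    print(f"{gbit} Gb/s per direction = {gbyte:.0f} GB/s; {bidir:.0f} GB/s bidirectional")
```

With these assumptions, twenty-four ports work out to 4,800 Gb/s (600 GB/s) per direction, or 1,200 GB/s counting both directions.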

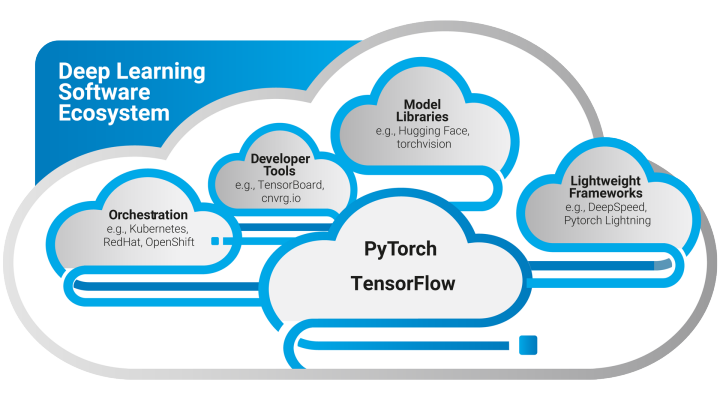

Intel Gaudi software integrates seamlessly with PyTorch, the predominant AI framework among GenAI developers, and provides access to optimized Hugging Face community-based models. This integration lets developers work at a high level of abstraction, promoting ease of use and productivity, and makes it easier to port models across different hardware types.

Joining the product lineup is the Gaudi 3 Peripheral Component Interconnect Express (PCIe) add-in card, designed for high efficiency with reduced power consumption. This new form factor, ideal for workloads such as fine-tuning, inference, and retrieval-augmented generation (RAG), is a full-height card rated at 600 watts. It offers 128 GB of memory capacity and 3.7 TB per second of memory bandwidth, serving a wide range of computing needs.

The Intel Gaudi 3 accelerator presents a formidable challenge to the longstanding performance leader in the industry, boasting remarkable speed and efficiency derived from its AI-centric design.

Its architecture is distinguished by heterogeneous compute engines, including eight Matrix Multiplication Engines (MMEs) and 64 fully programmable Tensor Processor Cores (TPCs). It also supports the popular data types essential for deep learning, such as FP32, TF32, BF16, FP16, and FP8. Intel's Gaudi 3 architecture documentation provides further detail on how the design optimizes AI computing performance and efficiency.

The Intel Gaudi 3 accelerator is expected to provide substantial performance gains for training and inference across prominent generative AI (GenAI) models. Compared with the Nvidia H100, Intel anticipates average improvements in both training time and inference performance.

Outstanding networking capabilities begin at the processor level, as demonstrated by integrating twenty-four 200 Gigabit Ethernet ports directly onto the Intel Gaudi 3 accelerator chip.

This integration enables more efficient scaling within individual servers and extensive scaling across cluster-scale systems, supporting rapid training and inference for models of varying sizes. Because Intel Gaudi networking scales near-linearly, the cost-performance advantage is maintained whether you scale out to four nodes or four hundred. Intel's documentation on scaling Intel Gaudi accelerators offers further detail.
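"Near-linear" scalability is commonly quantified as scaling efficiency: the throughput measured on N nodes divided by N times the single-node throughput, where 1.0 means perfectly linear scaling. The sketch below computes that ratio; the throughput figures in the demo are hypothetical placeholders, not Gaudi 3 measurements, and the function name is illustrative.

```python
def scaling_efficiency(single_node_throughput: float,
                       n_nodes: int,
                       n_node_throughput: float) -> float:
    """Measured N-node throughput as a fraction of ideal linear scaling."""
    if n_nodes < 1 or single_node_throughput <= 0:
        raise ValueError("need n_nodes >= 1 and a positive single-node throughput")
    ideal = n_nodes * single_node_throughput  # perfectly linear scaling
    return n_node_throughput / ideal


if __name__ == "__main__":
    # Hypothetical numbers for illustration only (samples per second).
    base = 1000.0
    for nodes, measured in [(4, 3900.0), (400, 380000.0)]:
        eff = scaling_efficiency(base, nodes, measured)
        print(f"{nodes} nodes: {eff:.1%} of linear")
```

If hardware cost grows roughly in proportion to node count, an efficiency that stays close to 1.0 keeps the cost per unit of throughput roughly flat as nodes are added, which is the sense in which the cost-performance advantage is maintained.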

Intel Gaudi 3 AI accelerators provide advanced support for cutting-edge generative AI and large language models (LLMs) within data center environments. Paired with Xeon® Scalable Processors, the preferred host CPU for top-tier AI systems, they ensure robust enterprise-level performance and dependability.

The deep learning software ecosystem unites industry-leading software providers, tools, and code to speed the development of cutting-edge deep learning models built with PyTorch, TensorFlow, PyTorch Lightning, and DeepSpeed. Through collaboration with software partners such as Hugging Face, Intel quickly adds support for the newest and most sought-after models, simplifying development for the ever-growing range of deep learning applications. Building on open-source code, models, and capabilities from trusted partners who share Intel’s commitment, Intel's software team focuses on models for computer vision, natural language processing, generative AI, and multimodal applications.

With over 500k AI models and more than 320k GitHub stars, Hugging Face offers a rich repository for exploration. Discover the Optimum Habana library, tailored for Intel Gaudi accelerators, to further enhance your AI endeavors.

Simplify and accelerate deep learning optimization with DeepSpeed. Designed for ease of use, this software empowers scalability and speed, with a special emphasis on large-scale models.

Streamline PyTorch deep learning workloads with PyTorch Lightning, a lightweight wrapper that organizes PyTorch code to speed up development and deployment.

For customers adopting Intel Gaudi accelerator solutions, Cnvrg.io delivers robust MLOps support, making it easy to integrate and manage machine learning operations for better performance and efficiency.

Our Value

As a leading HPC provider, Aspen Systems offers a standardized build and package selection that follows HPC best practices. However, unlike some other HPC vendors, we also give you the opportunity to customize your cluster hardware and software with options and capabilities tuned to your specific needs and environment. This is a more complex process than simply providing a “canned” cluster, which may or may not fit your needs. Many customers value our flexibility and engineering expertise, returning again and again for upgrades to existing clusters or for new clusters that mirror their current optimized solutions. Other customers value our standard cluster configuration for their HPC computing needs and purchase it from us repeatedly. Call an Aspen Systems sales engineer today to procure a cluster built to your specifications.

Aspen Systems typically ships clusters to our customers as complete turn-key solutions, including full remote testing by you before the cluster is shipped. All a customer will need to do is unpack the racks, roll them into place, connect power and networking, and begin computing. Of course, our involvement doesn’t end when the system is delivered.

With decades of experience in the high-performance computing industry, Aspen Systems is uniquely qualified to provide systems, infrastructure, and management support tailored to your requirements. Built to the highest quality, customized, and fully integrated, our clusters provide many years of trouble-free computing for customers all over the world. We can handle all aspects of your HPC needs, including facility design or upgrades, supplemental cooling, power management, remote access solutions, software optimization, and many additional managed services.

Aspen Systems offers industry-leading support options. Our Standard Service Package is free of charge to every customer. We offer additional support packages, such as our future-proofing Flex Service or our fully managed Total Service package, along with many additional Add-on services! With our On-site services, we can come to you to fully integrate your new cluster into your existing infrastructure or perform other upgrades and changes you require. We also offer standard and custom Training packages for your administrators and your end-users or even informal customized, one-on-one assistance.

Aspen Systems is taking orders NOW!

The Intel Gaudi 3 accelerator is set to be released to original equipment manufacturers (OEMs) in the second quarter of 2024, in industry-standard Universal Baseboard and open accelerator module (OAM) configurations.

General availability of Intel Gaudi 3 accelerators is slated for the third quarter of 2024, with the Intel Gaudi 3 PCIe add-in card expected to be available in the fourth quarter of 2024.

Moreover, the Intel Gaudi 3 accelerator will power various cost-effective cloud infrastructures for large language model (LLM) training and inference, offering organizations price-performance advantages and a wider array of choices. Notably, organizations like NAVER will benefit from this offering.

Developers can get started today with Intel Gaudi 2-based instances on Intel Developer Cloud, where they can learn, prototype, test, and run applications and workloads.

Unlock the Power of AI with Aspen Systems Inc.

Our team of experienced sales engineers specializes in helping companies navigate the complex landscape of GPUs and accelerators to find the perfect fit for their unique business needs and AI initiatives. With a deep understanding of the latest technologies and market trends, we provide tailored solutions that maximize performance, efficiency, and ROI. Whether scaling up your AI infrastructure or optimizing HPC workflows, trust Aspen Systems to guide you toward the ideal GPU/accelerator solution, ensuring your business stays ahead in the rapidly evolving world of high-performance computing.