Introducing VDURA: Velocity Meets Durability

New Name, New Model, New Horizons

From its beginnings as Panasas® to its evolution into VDURA, the software has consistently set the benchmark for reliability, availability, and simplicity in high-performance data storage and management. Now offered under a flexible Software as a Service (SaaS) model, VDURA builds on this strong foundation to provide an AI and HPC Data Platform that delivers top-tier performance, durability, and ease of use, all with exceptional economic benefits.

THE VDURA DATA PLATFORM

Propel future insights with the secure data platform for AI and HPC

VDURA, the only proven self-healing and self-managing data platform, is designed to accelerate HPC and AI applications without data loss, downtime, or hassle.

The VDURA Data Platform represents the next step in the evolution of our cutting-edge parallel NAS technology, tailored to enhance AI and high-performance computing (HPC) data infrastructure with unmatched performance, durability, and simplicity. By integrating various storage media into a single, globally accessible framework, it provides customers with exceptional flexibility and cost-efficiency. Rooted in a history of innovation, the VDURA Data Platform delivers outstanding reliability and performance to accelerate next-generation workloads.

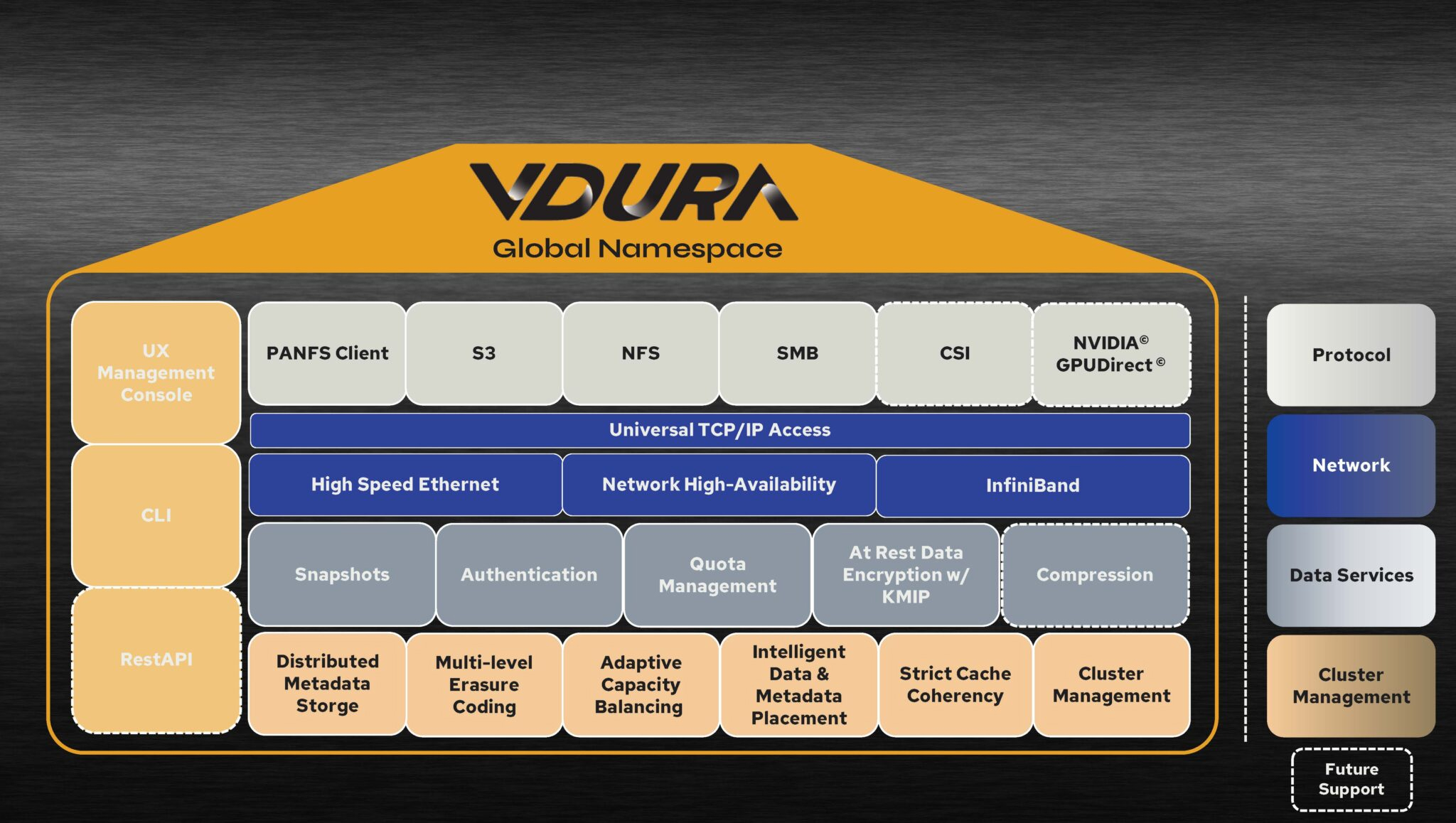

Platform Architecture Overview

A key distinguishing feature of VDURA’s data platform is its parallel architecture, which integrates multiple storage types within a single platform and provides advanced data protection, automated data placement, and a unified management interface. Customers can run the software on flash-optimized servers, on capacity-optimized enclosures, or in the cloud.

Platform Features

Boundless Performance and Capacity Scalability

The VDURA Data Platform offers limitless scalability in both performance and capacity, aligning with the agility and efficiency typical of hyperscaler environments. Its intelligent parallel storage architecture enhances data throughput and ensures efficient processing, effectively eliminating hotspots and bottlenecks. This enables VDURA to meet the extensive and demanding requirements of contemporary HPC and AI applications without sacrificing performance.

Versatile Integration and Configurable Flexibility

The VDURA Data Platform seamlessly incorporates various storage media within a unified architecture, allowing organizations to tailor storage solutions to specific performance, capacity, and cost requirements. This adaptable and balanced strategy ensures each deployment is optimized for present demands and future expansion, offering unparalleled flexibility across on-premises, cloud, and hybrid IT environments.

Established on a Robust and Secure Storage Foundation

The VDURA Data Platform is built on a highly durable foundation that delivers exceptional data protection, integrity, and availability. Advanced erasure coding and encryption safeguard against data loss and corruption, while automated recovery and redundancy features keep operations running without interruption. This resilient architecture provides the reliability that essential HPC and AI workloads demand, keeping data secure and readily accessible.

Affordable and Simplified Data Management

Engineered for maximum efficiency, the VDURA Data Platform offers a turnkey solution that reduces management overhead. It allows even part-time IT administrators to effortlessly manage environments of any scale without the need for continual adjustments as workloads and data volumes fluctuate. This user-friendly design, combined with strategic resource optimization and the flexibility to incorporate various storage media into a single platform, greatly reduces the total cost of ownership.

OUR VALUE

DECADES OF SUCCESSFUL HPC DEPLOYMENTS

Architected For You

As a leading HPC provider, Aspen Systems offers a standardized build and package selection that follows HPC best practices. However, unlike some other HPC vendors, we also give you the opportunity to customize your cluster hardware and software with options and capabilities tuned to your specific needs and your environment. This is a more complex process than simply providing you a “canned” cluster, which might or might not best fit your needs. Many customers value us for our flexibility and engineering expertise, coming back again and again for upgrades to existing clusters or for new clusters that mirror their current optimized solutions. Other customers rely on our standard cluster configuration to serve their HPC computing needs and purchase that option from us repeatedly. Call an Aspen Systems sales engineer today if you wish to procure a cluster custom-built to your specifications.

Solutions Ready To Go

Aspen Systems typically ships clusters to our customers as complete turn-key solutions, including full remote testing by you before the cluster is shipped. All a customer will need to do is unpack the racks, roll them into place, connect power and networking, and begin computing. Of course, our involvement doesn’t end when the system is delivered.

True Expertise

With decades of experience in the high-performance computing industry, Aspen Systems is uniquely qualified to provide systems, infrastructure, and management support tailored to your specific requirements. Built to the highest quality standards, customized, and fully integrated, our clusters provide many years of trouble-free computing for customers all over the world. We can handle all aspects of your HPC needs, including facility design or upgrades, supplemental cooling, power management, remote access solutions, software optimization, and many additional managed services.

Passionate Support, People Who Care

Aspen Systems offers industry-leading support options. Our Standard Service Package is free of charge to every customer. We offer additional support packages, such as our future-proofing Flex Service and our fully managed Total Service package, along with many additional Add-on services! With our On-site services, we can come to you to fully integrate your new cluster into your existing infrastructure or perform any other upgrades and changes you require. We also offer standard and custom Training packages for your administrators and end-users, or even informal, customized one-on-one assistance.

Solutions By Industry

VDURA for ACADEMIA

Accelerate discoveries, enhance data reliability, and streamline research workflows with the VDURA Data Platform. This advanced platform boosts innovation in areas ranging from genomics research to cryogenic electron microscopy.

VDURA for ENERGY

Optimize exploration, accelerate production speeds, and achieve sustainability targets more rapidly with the VDURA Data Platform. This powerful platform converts complex data into actionable insights, reducing costs and enhancing efficiency.

VDURA for FEDERAL

Secure, streamline, and scale with VDURA. Our Data Platform provides unmatched data protection and efficiency, enabling federal agencies to manage mission-critical AI and HPC workloads with ease and precision.

VDURA for Academic Research

The straightforward data platform for the most intricate research.

Researchers in data-intensive fields such as genomics, molecular biology, and particle physics face a significant challenge: accessing and managing exponentially growing datasets with limited financial and personnel resources. VDURA addresses this issue by providing an intelligent data platform that meets their performance needs with unparalleled cost efficiency and ease of use.

With the VDURA Data Platform, small IT teams, multiple research groups, lean budgets, massive data volumes, and diverse workloads are no longer obstacles. Enhance productivity and foster groundbreaking insights with a fast data platform that is easy to manage, infinitely scalable, and exceptionally resilient.

VDURA for Energy

The data platform powering the future of energy.

As global energy demand increases, companies are under immense pressure to scale production while meeting sustainability goals. Leveraging vast amounts of data gives energy companies a significant advantage, which makes smart data infrastructure decisions crucial to success.

VDURA offers intelligent data solutions specifically designed for the energy sector’s most demanding high-performance computing (HPC) and AI applications. Whether conducting extensive seismic analyses, optimizing solar and battery systems, or developing sustainable biofuels, the VDURA Data Platform empowers organizations to extract maximum value from their data and maintain peak operational efficiency.

VDURA for Federal

The data platform for mission-critical AI and HPC workloads

Federal agencies require a secure and robust data foundation to support their high-performance computing (HPC) and AI initiatives. Threats such as data loss, storage failures, and security breaches endanger mission-critical deadlines and, most importantly, national security.

The VDURA Data Platform overcomes these challenges with a superior reliability architecture that ensures exceptional durability and resiliency. Integrated safeguards protect against data loss, corruption, and service interruptions. By prioritizing maximum availability and rapid recovery, VDURA sustains mission-critical operations without compromising performance, making it the proven backbone for federal data infrastructure.

Cooling