The World’s Fastest Parallel File System

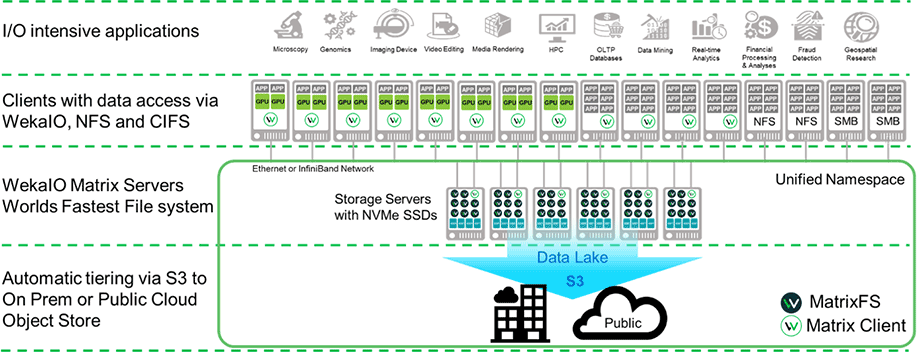

WekaIO Matrix is an NVMe-native, resilient, scalable parallel file storage system. It supports both GPU- and CPU-based clusters and was designed from the ground up for performance and scalability, avoiding the common bottlenecks of traditional NAS systems. As the industry’s first flash-native parallel file system, it offers all-flash performance and can deliver over 40 GBytes/second to a single GPU- or CPU-based server. It supports up to 1024 individual file systems across a shared storage environment and up to 6.4 billion files in a single directory.

WekaIO Matrix Architecture


Advanced Data Protection and Security

WekaIO designed its data distribution and data protection from scratch around modern SSD technology rather than legacy technology. The result is a new category of data protection with integrated intelligence that offers better protection than conventional approaches such as RAID or erasure coding while avoiding their associated overhead. Its distributed data protection scheme can survive two or four simultaneous server failures, depending on the configured protection level, and its end-to-end data integrity protects against “silent” data corruption.
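End-to-end integrity checking generally works by computing a checksum when data is written and verifying it on every read. Corruption that happens silently in between is then caught instead of being returned to the application. The sketch below illustrates that general idea. It uses CRC-32 (from Python's standard `zlib` module) purely for illustration. The `StoredBlock`, `write_block`, `read_block` and `CorruptionError` names are hypothetical and are not part of any WekaIO interface, and the checksum algorithm Matrix actually uses is not stated on this page.

```python
import zlib
from dataclasses import dataclass


class CorruptionError(Exception):
    """Raised when stored data no longer matches the checksum recorded at write time."""


@dataclass
class StoredBlock:
    """Hypothetical stored block: the data plus the checksum computed when it was written."""
    data: bytes
    checksum: int


def write_block(data: bytes) -> StoredBlock:
    # Compute the checksum at write time (CRC-32 chosen only for illustration).
    return StoredBlock(data=data, checksum=zlib.crc32(data))


def read_block(block: StoredBlock) -> bytes:
    # Recompute on read; a mismatch means the bytes changed silently after the write.
    if zlib.crc32(block.data) != block.checksum:
        raise CorruptionError("checksum mismatch: silent data corruption detected")
    return block.data


if __name__ == "__main__":
    good = write_block(b"hello, matrix")
    print(read_block(good))

    # Simulate a single bit flip on the media without updating the stored checksum.
    flipped = bytes([good.data[0] ^ 0x01]) + good.data[1:]
    try:
        read_block(StoredBlock(data=flipped, checksum=good.checksum))
    except CorruptionError as exc:
        print(exc)
```

CRC-32 detects every single-bit error, so the simulated flip is always caught. Production systems may use stronger checksums or hashes.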

Instantaneous per-file-system snapshots can be backed up to the object storage tier for portability and disaster recovery.

Data can be encrypted at rest and in flight, and access is controlled through common user-management systems such as Active Directory and LDAP, which provide authentication and authorization for granular permissions and security.

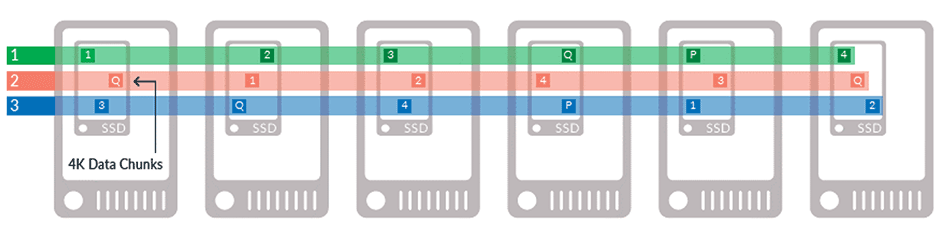

Matrix breaks each data stripe into chunks and writes each chunk to a different server, so a single server failure can never cause a double fault within a stripe. Even if a node has multiple SSDs, the chunks of a stripe are spread across different server nodes, with user-configurable resiliency.

When a failure occurs, the system treats it as a single failure, however large the failure domain is defined to be. Matrix also uses highly randomized data placement to improve performance and resiliency.
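The placement rule described above can be sketched as follows. The sketch assumes uniform random selection of distinct servers for each stripe. The actual Matrix placement algorithm is not described here, and all function names are hypothetical. Because no stripe ever has two chunks on the same server, losing k servers costs any stripe at most k chunks. The same reasoning holds if each "server" is read as a larger failure domain such as a rack, which is why an outage of a whole domain still counts as a single failure.

```python
import itertools
import random


def place_stripes(num_servers: int, num_stripes: int, width: int, seed: int = 0) -> list[list[int]]:
    """Hypothetical placement: each stripe's `width` chunks go to `width` distinct, randomly chosen servers."""
    if width > num_servers:
        raise ValueError("every chunk in a stripe needs its own server")
    rng = random.Random(seed)
    # random.sample never returns the same server twice for one stripe.
    return [rng.sample(range(num_servers), width) for _ in range(num_stripes)]


def max_chunks_lost(placement: list[list[int]], failed: set[int]) -> int:
    """Largest number of chunks any single stripe loses when the `failed` servers are down."""
    return max(sum(1 for server in stripe if server in failed) for stripe in placement)


def survives_all(placement: list[list[int]], num_servers: int, parity: int, failures: int) -> bool:
    """True if every combination of `failures` failed servers leaves each stripe recoverable,
    assuming a stripe can be rebuilt whenever it loses at most `parity` chunks."""
    return all(
        max_chunks_lost(placement, set(combo)) <= parity
        for combo in itertools.combinations(range(num_servers), failures)
    )


if __name__ == "__main__":
    # Hypothetical cluster: 10 servers, 4 data + 2 parity chunks per stripe.
    layout = place_stripes(num_servers=10, num_stripes=1000, width=6)
    print(all(max_chunks_lost(layout, {s}) <= 1 for s in range(10)))  # True
    print(survives_all(layout, num_servers=10, parity=2, failures=2))  # True
```

Random placement also spreads rebuild work across many servers after a failure, which fits the performance benefit claimed above. That benefit is not modeled here.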

WekaIO stripes data across servers with configurable stripe widths of 4 to 16 data components and applies 2 or 4 parity components per stripe.
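The trade-off between stripe width and parity can be worked out with simple arithmetic. The sketch below assumes one component per server, as the placement description earlier on this page states. It also assumes an erasure code that can rebuild a stripe from any `data` surviving components, so a stripe tolerates as many component losses as it has parity components. `StripeLayout` is a hypothetical helper, not a WekaIO API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StripeLayout:
    """Hypothetical model of a stripe: `data` data components plus `parity` parity components."""
    data: int
    parity: int

    def __post_init__(self) -> None:
        # Ranges taken from the text: 4-16 data components, 2 or 4 parity components.
        if not 4 <= self.data <= 16:
            raise ValueError("stripe width must be 4 to 16 data components")
        if self.parity not in (2, 4):
            raise ValueError("parity must be 2 or 4 components")

    @property
    def min_servers(self) -> int:
        # One component per server, so a stripe spans data + parity servers.
        return self.data + self.parity

    @property
    def efficiency(self) -> float:
        # Fraction of raw capacity that holds user data.
        return self.data / (self.data + self.parity)

    @property
    def tolerated_failures(self) -> int:
        # Assumes an MDS code: any `data` surviving components rebuild the stripe.
        return self.parity


if __name__ == "__main__":
    for d, p in [(4, 2), (16, 2), (16, 4)]:
        layout = StripeLayout(d, p)
        print(f"{d}+{p}: {layout.efficiency:.1%} usable, "
              f"survives {layout.tolerated_failures} failures, needs {layout.min_servers} servers")
    # 4+2: 66.7% usable, survives 2 failures, needs 6 servers
    # 16+2: 88.9% usable, survives 2 failures, needs 18 servers
    # 16+4: 80.0% usable, survives 4 failures, needs 20 servers
```

Wider stripes raise usable capacity but require more servers per stripe. Moving from 2 to 4 parity components doubles the number of simultaneous failures tolerated, at some cost in capacity.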

Support for Multiple Storage Media and Environments

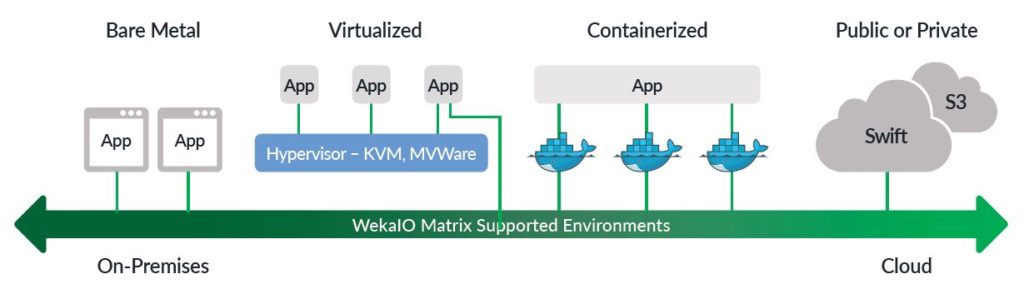

WekaIO Matrix software supports bare metal, containerized, virtual, and cloud environments and makes deployments easy, whether on NVMe, SAS or SATA storage. You can choose the environment best suited for your application.

Mixed I/O workloads are handled easily, without complex performance tuning. Data and metadata are fully distributed to avoid hot spots. Matrix features integrated, automatic tiering between fast solid-state devices (NVMe, SATA and SAS SSDs) and object storage (on premises or in the cloud). Data is accessible over multiple protocols, including POSIX, clustered SMB and clustered NFS.
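Automatic tiering is typically driven by a policy that decides, per file, whether data belongs on the SSD tier or the object tier. The sketch below shows one simple, hypothetical age-based policy. Files idle for longer than a retention period are demoted to object storage, and recently used files stay on (or return to) flash. The `Tier`, `FileState` and `apply_tiering` names, the retention parameter and the example paths are illustrative assumptions. The actual policy options Matrix exposes are not described on this page.

```python
import time
from dataclasses import dataclass
from enum import Enum
from typing import List, Optional, Tuple


class Tier(Enum):
    SSD = "ssd"
    OBJECT = "object"


@dataclass
class FileState:
    path: str
    last_access: float  # seconds since the epoch
    tier: Tier = Tier.SSD


def apply_tiering(files: List[FileState], retention_seconds: float,
                  now: Optional[float] = None) -> List[Tuple[str, Tier]]:
    """Hypothetical age-based policy: demote files idle longer than the retention
    period to the object tier and keep (or bring back) recently used files on SSD.
    Returns the (path, new tier) pairs for files that moved."""
    now = time.time() if now is None else now
    moved = []
    for f in files:
        idle = now - f.last_access
        target = Tier.OBJECT if idle > retention_seconds else Tier.SSD
        if f.tier is not target:
            f.tier = target
            moved.append((f.path, target))
    return moved


if __name__ == "__main__":
    now = time.time()
    files = [FileState("/proj/active.dat", now - 60),
             FileState("/proj/archive.dat", now - 30 * 86400)]
    print(apply_tiering(files, retention_seconds=7 * 86400, now=now))
    # [('/proj/archive.dat', <Tier.OBJECT: 'object'>)]
```

Passing `now` explicitly keeps the policy deterministic and easy to test. A real system would more likely promote data lazily on access rather than in a periodic sweep.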

Simplified Administration

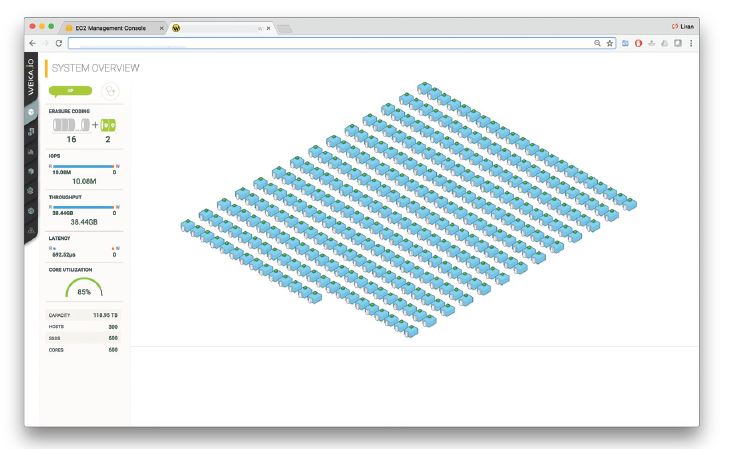

Using a simple management interface, a single administrator can manage the entire Matrix environment, even one holding exabytes of data, from a single location.

It is easy to install, easy to manage, provides proactive alerts in anticipation of system failures, and offers many different summary statistics and more detailed analytics of the system and its health.

Trinity

WekaIO’s Trinity software allows you to easily manage Matrix parallel file system storage, with three primary features (hence the name “Trinity”).

- System installation and configuration.

- Day-to-day system monitoring and management.

- Analytics for long term planning.

From the interface, you can:

- View an at-a-glance visualization of the health of the entire WekaIO cluster.

- View system throughput, latency and IOPS performance, and other key system statistics.

- Assess storage and CPU utilization.

- Monitor performance, server health, vital statistics, and important system events.

- Create a new file system.

- Add new nodes/expand the cluster.

- Connect to a cloud object storage tier.

- Create and configure dynamic data-tiering policies and set file system permissions.

Cooling
